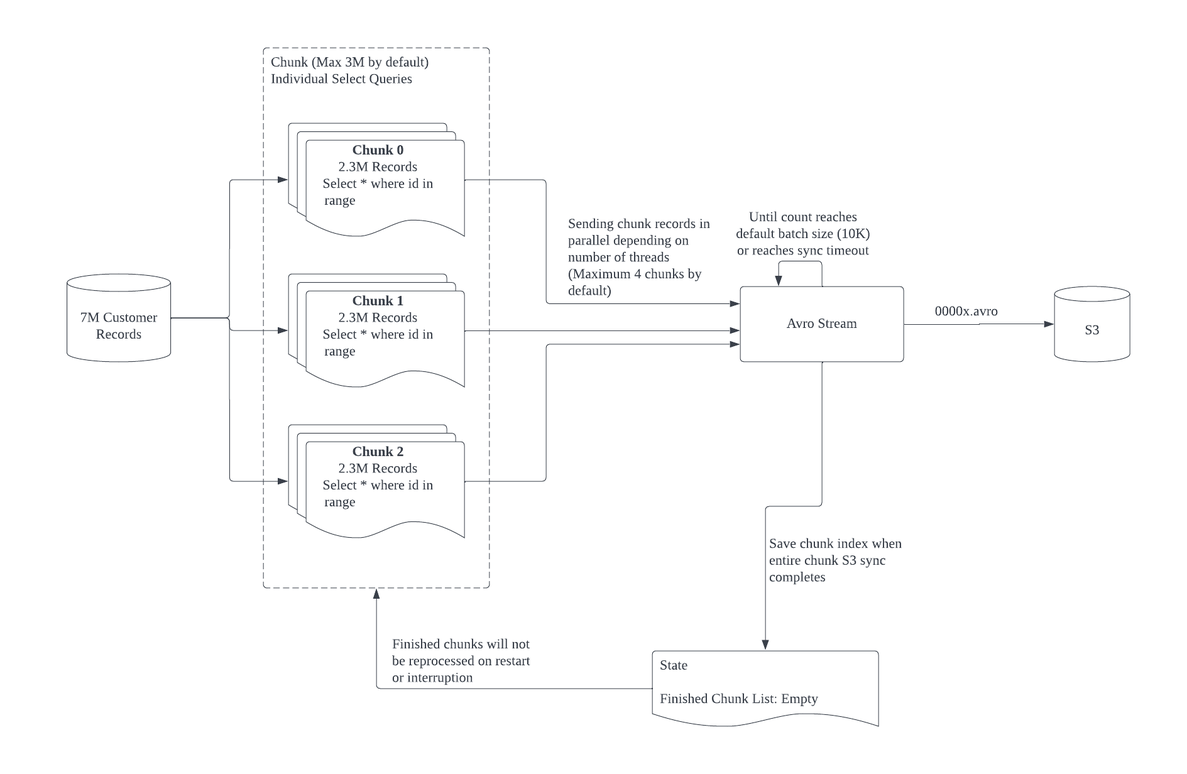

During the initial sync, Integrate.io counts the records in a table and divides them into equally sized chunks. Each chunk corresponds to a SELECT statement over its own range. The chunks run in parallel, and each one continuously sends row data to the Avro Stream. The Avro Stream combines these records into batches. When a batch reaches the default maximum size, or the sync timeout elapses, it is sent to S3 as an Avro file.
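The chunking idea can be sketched roughly as follows. This is a minimal illustration, not Integrate.io's implementation: the function names `chunk_ranges` and `chunk_query`, the table and column names, and the choice to split by primary key range are all assumptions. Splitting by key range yields equal record counts only when the keys are dense.

```python
from typing import List, Tuple


def chunk_ranges(min_id: int, max_id: int, num_chunks: int) -> List[Tuple[int, int]]:
    """Split the inclusive key range [min_id, max_id] into near-equal inclusive ranges.

    Hypothetical helper for illustration; the real chunk boundaries may be
    computed differently.
    """
    if num_chunks < 1:
        raise ValueError("num_chunks must be at least 1")
    if max_id < min_id:
        return []
    total = max_id - min_id + 1
    # Never create more chunks than there are key values.
    num_chunks = min(num_chunks, total)
    base, extra = divmod(total, num_chunks)
    ranges = []
    start = min_id
    for i in range(num_chunks):
        # The first `extra` chunks take one additional key each.
        size = base + (1 if i < extra else 0)
        end = start + size - 1
        ranges.append((start, end))
        start = end + 1
    return ranges


def chunk_query(table: str, key: str, lo: int, hi: int) -> str:
    """Build the ranged SELECT for one chunk.

    Illustration only: production code should quote identifiers and bind
    the range values as parameters.
    """
    return f"SELECT * FROM {table} WHERE {key} BETWEEN {lo} AND {hi}"


if __name__ == "__main__":
    for lo, hi in chunk_ranges(1, 10, 3):
        print(chunk_query("orders", "id", lo, hi))
```

Each resulting statement covers a disjoint range, so the chunks can be read in parallel without overlapping rows.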

Once all records of a chunk have been transferred to S3, the whole chunk is marked as finished and will not be reprocessed after a restart or interruption.
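The resume behavior can be pictured with a small checkpoint sketch. It assumes, purely for illustration, that finished chunk IDs are persisted in a local JSON file; the names `load_finished` and `run_chunks`, and the storage format, are hypothetical and say nothing about how Integrate.io actually tracks chunk state.

```python
import json
from pathlib import Path
from typing import Callable, Iterable, Set


def load_finished(path: Path) -> Set[int]:
    """Return the set of chunk IDs already marked as finished."""
    if not path.exists():
        return set()
    return set(json.loads(path.read_text()))


def run_chunks(chunk_ids: Iterable[int],
               transfer: Callable[[int], None],
               path: Path) -> None:
    """Transfer each unfinished chunk, recording it only after it completes."""
    finished = load_finished(path)
    for chunk_id in chunk_ids:
        if chunk_id in finished:
            # Already fully transferred in an earlier run: skip it.
            continue
        # If this raises, the chunk is not marked and will be retried next run.
        transfer(chunk_id)
        finished.add(chunk_id)
        path.write_text(json.dumps(sorted(finished)))
```

Because a chunk is recorded only after its transfer returns, an interruption in the middle of a chunk causes that chunk to be retried in full, while completed chunks are skipped.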

Note that chunking is only supported on tables with numeric primary keys (integer, big integer, medium integer).